Apple has been developing an autonomous driving system for some time. It was originally intended for a car built by Apple itself, but since that project was canceled, the Cupertino company is now focusing solely on developing the autonomous driving system.

Apple would sell this system to car manufacturers, and today we have learned some interesting details about it. The system relies on LiDAR technology, together with a series of cameras and dedicated software, to recognize pedestrians, cars and cyclists at long distances, as Apple's researchers describe below.

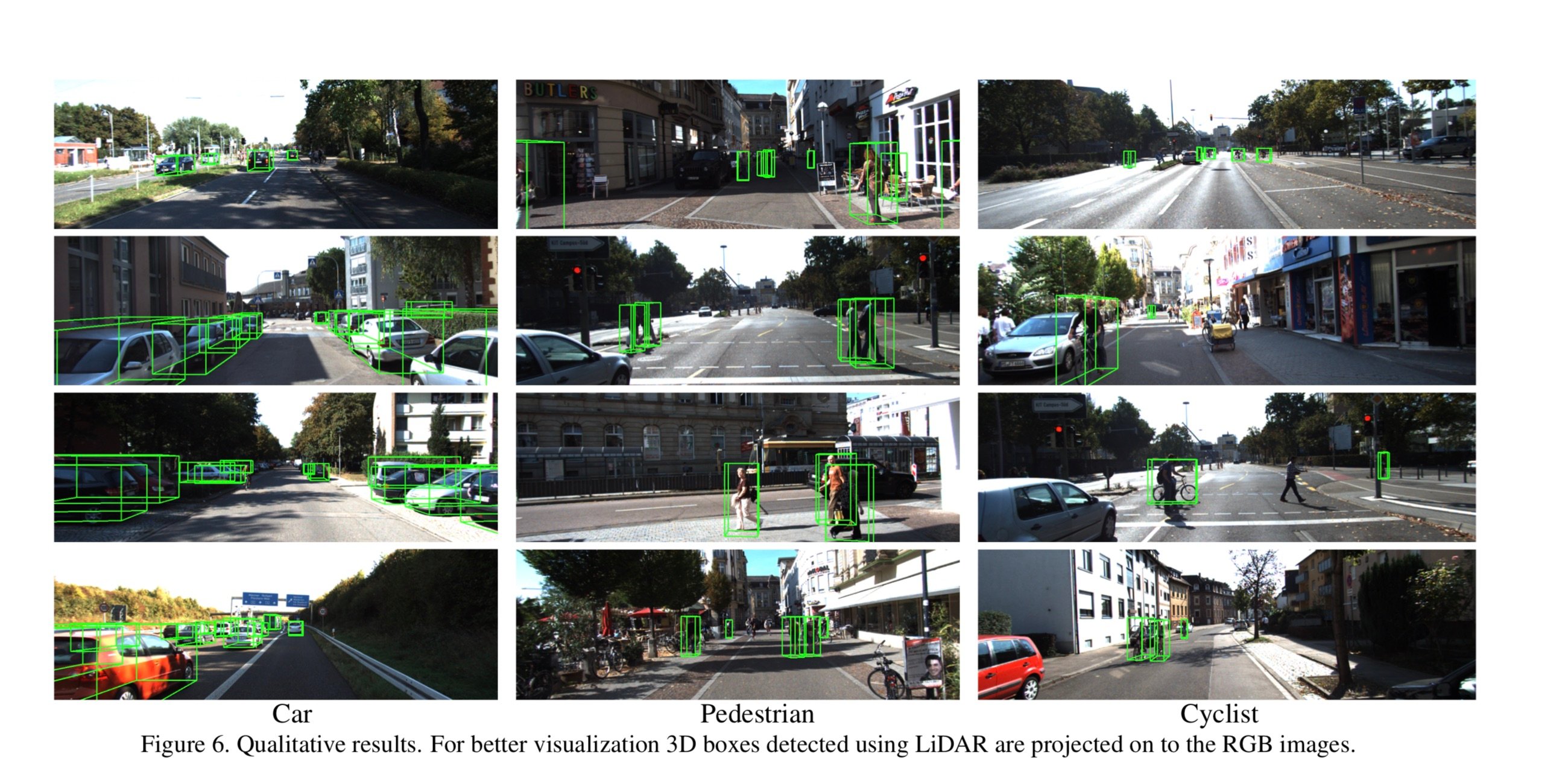

"Accurate detection of objects in 3D point clouds is a central problem in many applications, such as autonomous navigation, housekeeping robots, and augmented/virtual reality. To interface a highly sparse LiDAR point cloud with a region proposal network (RPN), most existing efforts have focused on hand-crafted feature representations, for example, a bird's eye view projection. In this work, we remove the need of manual feature engineering for 3D point clouds and propose VoxelNet, a generic 3D detection network that unifies feature extraction and bounding box prediction into a single stage, end-to-end trainable deep network."

Apple claims that its technology detects 3D objects such as cars, pedestrians and cyclists better than similar LiDAR-based detection methods. So far, however, Apple has only run software experiments on existing data rather than real-world tests, so the technology is not yet ready to be deployed in a car.

In the meantime, Apple is allowing its engineers to publish research related to the technology they are developing. The goal is to share this information as widely as possible and attract help in developing the software and hardware, though it remains to be seen how successful this approach will be.

"Most existing methods in LiDAR-based 3D detection rely on hand-crafted feature representations, for example, a bird's eye view projection. In this paper, we remove the bottleneck of manual feature engineering and propose VoxelNet, a novel end-to-end trainable deep architecture for point cloud based 3D detection. Our approach can operate directly on sparse 3D points and capture 3D shape information effectively. We also present an efficient implementation of VoxelNet that benefits from point cloud sparsity and parallel processing on a voxel grid."
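To give a rough idea of what "benefits from point cloud sparsity" and "a voxel grid" mean, here is a minimal Python sketch of the grouping step only: LiDAR points are binned into equally sized 3D cells (voxels), and only the cells that actually contain points are stored. This is a simplified illustration, not Apple's implementation. The function names `voxelize` and `voxel_centroids`, the voxel size, and the `max_points_per_voxel` cap are hypothetical choices, and the learned feature layers of VoxelNet are not reproduced here.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
VoxelIndex = Tuple[int, int, int]


def voxelize(points: List[Point],
             voxel_size: Tuple[float, float, float],
             max_points_per_voxel: int = 35) -> Dict[VoxelIndex, List[Point]]:
    """Group points into a sparse voxel grid (hypothetical helper).

    Only non-empty voxels appear in the result, so memory grows with the
    number of occupied cells rather than with the size of the whole grid.
    """
    vx, vy, vz = voxel_size
    voxels: Dict[VoxelIndex, List[Point]] = defaultdict(list)
    for x, y, z in points:
        # Integer voxel coordinates; floor() also handles negative coordinates.
        key = (math.floor(x / vx), math.floor(y / vy), math.floor(z / vz))
        bucket = voxels[key]
        # Cap the points kept per voxel to bound memory (simplification:
        # we keep the first points instead of sampling randomly).
        if len(bucket) < max_points_per_voxel:
            bucket.append((x, y, z))
    return dict(voxels)


def voxel_centroids(voxels: Dict[VoxelIndex, List[Point]]) -> Dict[VoxelIndex, Point]:
    """A very crude per-voxel feature: the mean position of its points."""
    centroids: Dict[VoxelIndex, Point] = {}
    for key, pts in voxels.items():
        n = len(pts)
        centroids[key] = (sum(p[0] for p in pts) / n,
                          sum(p[1] for p in pts) / n,
                          sum(p[2] for p in pts) / n)
    return centroids


if __name__ == "__main__":
    cloud = [(0.1, 0.1, 0.1), (0.15, 0.12, 0.3), (1.05, 0.05, 0.0), (-0.1, 0.0, 0.0)]
    grid = voxelize(cloud, voxel_size=(0.2, 0.2, 0.4))
    print(len(grid))  # 3 non-empty voxels
    print(voxel_centroids(grid))
```

Because empty space is never stored, each occupied voxel can be processed independently, which is what makes this kind of layout a natural fit for the parallel processing the researchers mention.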

Apple is clearly determined to bring this autonomous driving system to market. Many people are looking forward to it, and it will be very interesting to see whether it succeeds.

"Our experiments on the KITTI car detection task show that VoxelNet outperforms state-of-the-art LiDAR based 3D detection methods by a large margin. On more challenging tasks, such as 3D detection of pedestrians and cyclists, VoxelNet also demonstrates encouraging results showing that it provides a better 3D representation".